Everyone knows about the Turing Test. It was first proposed by Alan Turing in his famous 1950 paper ‘Computing Machinery and Intelligence’. The paper started with the question ‘Can machines think?’. Turing noted that philosophers would be inclined to answer that question by hunting for a definition. They would identify the necessary and sufficient conditions for thinking and then they would try to see whether machines met those conditions. They would probably do this by closely investigating the ordinary language uses of the term ‘thinking’ and engaging in a series of rational reflections on those uses. At least, Oxbridge philosophers in the 1950s would have been inclined to do it this way.

Turing thought this approach was unsatisfactory. He proposed an alternative test for machine intelligence. The test was based on a parlour game: the imitation game. A tester would be placed in one room with a keyboard and a screen. They would carry out a conversation with an unknown other via this keyboard and screen. The ‘unknown others’ would be in one of two rooms. One would contain a machine and the other would contain a human. Both would be capable of communicating with the tester via typed messages on the screen. Turing’s claim was that if the tester could carry out a long, detailed conversation with a machine without being able to tell whether they were talking to a machine or a human, we could say that the machine was capable of thinking.

For better or worse, Turing’s test has taken hold of the popular imagination. The annual Loebner prize involves a version of the Turing Test. Computer scientists compete to create chat-bots that they hope will fool the human testers. The belief seems to be that if a ‘machine’ succeeds in this test we will have learned something important about what it means to be human.

Popular though the Turing Test is, few people have written about it in interesting and novel ways. Most people think about the Turing Test from the ‘human-first’ side. That is to say, they start with what it means to be human and work from there to various speculations about creating human-like machines. What if we thought about it from the other side? What if we started with what it means to be a machine and then worked towards various speculations about creating machine-like humans?

That is what Brett Frischmann encourages us to do in his fascinating paper ‘Human-Focused Turing Tests’. He invites us to shift our perspective on the Turing Test and consider what it means to be a machine. From there, he wonders whether current methods of socio-technical engineering are making us more machine-like. In this post, I want to share some of Frischmann’s main ideas. I won’t be able to do justice to the rich smorgasbord of ideas he serves up in his paper, but I might be able to give you a small sampling.

(Note: This post serves as a companion to a podcast interview I recently did with Brett. That interview covers the ideas in far more detail and will be posted here soon.)

1. Machine-Like Properties and the Turing Line

Let’s start by talking about the Turing Line. This is something that is implicit in the original Turing Test. It is the line that separates humans from machines. The assumption underlying the Turing Test is that there is some set of properties or attributes that define this line, the main one being the ability to think. When we see an entity with the ability to think, then we know we are on one side of the line, not the other.

But what kind of a line is the Turing Line? How should we think about it? Turing himself obviously must have thought that the line was porous. If you created a clever enough machine, it could cross the line. Frischmann suggests that the line could be ‘bright’ or ‘fuzzy’, i.e. there could be a very clear distinction between humans and machines or the distinction could be fuzzy. Furthermore, he suggests that the line could be one that shifts over time. Back in the distant past of AI we might have thought that the ability to play chess to Grandmaster level marked the dividing line. Nowadays we tend to focus on other attributes (e.g. emotional intelligence).

The Turing Line is useful because it helps us to think about the different perspectives we can take on the Turing Test. For better or worse, most people have approached the test from the machine-side of the line. The assumption seems to have been that we start with machines lying quite some distance from the line, but over time, as they grow more technologically sophisticated, they get closer. Frischmann argues that we should also think about it from the other side of the line. In doing so, we might learn that changes in the technological and social environment are pushing humans in the direction of machines.

When we think about it from that side of the line, we get into the mindset needed for a Reverse Turing Test. Instead of thinking about the properties or attributes that are distinctively human, we start thinking about the properties and attributes that are distinctly machine-like. These properties and attributes are likely to reflect cultural biases and collective beliefs about machines. So, for instance, we might say that a distinctive property of machines is their unemotional or relentlessly logical approach to problem-solving. We would then check to see whether there are any humans that share those properties. If there are, then we might be inclined to say that those humans are machine-like. This doesn’t mean they are actually indistinguishable from machines, but it might imply that they are closer to the Turing Line than other human beings.

Here’s a simple pop-cultural example that might help you to think about it. In his most iconic movie role, Arnold Schwarzenegger played a machine: the Terminator. If I recall correctly, Arnie wasn’t originally supposed to play the Terminator. He auditioned for the role of one of the humans. But the director, James Cameron, was impressed by Arnie’s insights into what it would take to act as the machine. Arnie emphasised the rigid, mechanical movements and laboured style of speech that would be indicative of machine-likeness. Indeed, he argued with Cameron over one of the character’s most famous lines: ‘I’ll be back!’. Arnie thought that a machine wouldn’t use the contraction ‘I’ll’; it would say ‘I will be back’. Cameron managed to persuade him otherwise.

What is interesting about the example is the way in which both Arnie and Cameron made assumptions about machine-like properties or attributes. Their artistic goal was to use these stereotypical properties to provide cues to the audience that they were not watching a human. Maybe Arnie and Cameron were completely wrong about these attributes, but the fact that they were able and willing to make guesses as to what is distinctively machine-like shows us how we might go about constructing a Reverse Turing Test.

2. The Importance of the Test Environment

We’ll talk about the construction of an actual Reverse Turing Test in a moment. Before then, I just want to draw attention to another critical, and sometimes overlooked, factor in the original Turing Test: the importance of the test environment in establishing whether or not something crosses the Turing Line.

In day-to-day life, there is a whole bundle of properties we associate with ‘humans’ and a whole other bundle that we associate with ‘machines’. For example, humans are made of flesh and bone; they have digestive and excretory systems; they sweat and smell; they talk in different pitches and tones; they have eyes and ears and hair; they wear clothes; have jobs; laugh with their friends and so on. Machines, on the other hand, are usually made of metal and silicon; they have no digestive and excretory systems; they rely on electricity (or some other power source) for their survival; they don’t have human sensory systems; and so on. I could rely on some or all of these properties to help me distinguish between machines and humans.

Turing thought it was very important that we not be allowed to do the same in the Turing Test. The test environment should be constructed in such a way that extraneous or confounding attributes are excluded. The relevant attribute was the capacity to think (that is, to carry out a detailed and meaningful conversation), not the capacity to sweat and smell. As Frischmann puts it:

Turing imposed significant constraints on the means of observation and communication. He did so because he was interested in a particular capability — to think like a human — and wanted to be sure that the evidence gathered through the application of the test was relevant and capable of supporting inferences about that particular capacity.

(Frischmann 2014, 16)

Frischmann then goes on:

But I want to make sure we see how much work is done by the constructed environment — the rules and rooms — that Turing built.

(Frischmann 2014, 16)

Quite. The constructed environment makes it a little bit easier, though not too easy, for a machine to pass the Turing Test. It means that the tester isn’t distracted by extraneous variables.

This seems reasonable given the original aims of the Test. That said, some disagree with the original construction and claim that some of the variables Turing excluded need to be factored back in. We can argue the details if we like, but that would miss the important point from the present perspective. The important point is that not only can the construction of the test environment make it less difficult for machines to appear human-like, it can also make it less difficult for humans to appear machine-like. Indeed, this is true of the original Turing Test. In some reported instances humans have been judged to be machines through their conversational patterns.

This insight is crucial when it comes to thinking about the broader questions arising from technology and social engineering. Could it be that the current techno-social environment is encouraging humans to act in a machine-like manner? Are we being nudged, incentivised, manipulated and biased into expressing more machine-like properties? Could we be in the midst of rampant dehumanisation? This is something that a Reverse Turing Test can help to reveal.

3. So how could we construct a Reverse Turing Test?

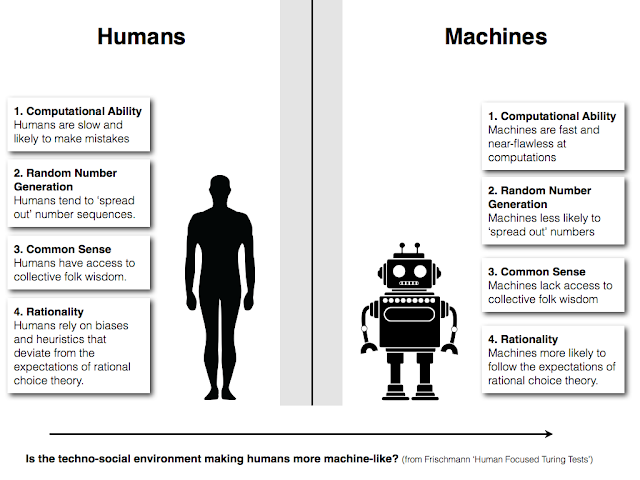

That’s enough set-up. Let’s turn to the critical question: how might we go about creating an actual Reverse Turing Test? Remember the method: find some property we associate with machines but not humans and see whether humans exhibit that property. Adopting that method, Frischmann proposes four possible criteria that could provide the basis for a Reverse Turing Test. I’ll go through them each briefly.

The first criterion is mathematical computation. If there is one thing that machines are good at, it is performing mathematical computations. Modern computers are much faster and more reliable than humans at doing this. So we could use this ability to draw the line between machines and humans. We would have to level the playing field to some extent. We could assign a certain number of mathematical computations to be performed in a given timespan and we could slow the computer down to some extent. We could then follow the basic set-up of the original Turing Test and get both the machine and the human to output their solutions to those mathematical computations on a screen.

In performing this test, we would probably expect the human to get more wrong answers and display some noticeable downward trend in performance over time. If the human did not, then we might be inclined to say that they are machine-like, at least in this respect. Would this tell us anything of deeper metaphysical or moral significance? Probably not. There are some humans who are exceptionally good at computations. We don’t necessarily think they are unhuman or de-humanised by that fact.
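To make the two signals mentioned above concrete (more wrong answers, and a downward trend over time), here is a minimal sketch in Python. It is not taken from Frischmann’s paper: the function names and the thresholds are hypothetical, and it assumes each answer has already been marked correct or incorrect in the order it was given.

```python
def error_rate(results):
    """Fraction of answers that were wrong (results is a list of booleans)."""
    if not results:
        raise ValueError("need at least one answer")
    return sum(1 for ok in results if not ok) / len(results)


def fatigue_drop(results):
    """Accuracy in the first half of the session minus accuracy in the second.

    A positive value means performance got worse over time.
    """
    if len(results) < 2:
        raise ValueError("need at least two answers")
    mid = len(results) // 2
    first, second = results[:mid], results[mid:]
    return sum(first) / len(first) - sum(second) / len(second)


def machine_like(results, max_error=0.01, max_drop=0.01):
    """Hypothetical rule: few errors and no noticeable decline.

    Both thresholds are arbitrary illustrative values, not empirical ones.
    """
    return error_rate(results) <= max_error and fatigue_drop(results) <= max_drop


# A subject who starts well and tires towards the end of the session.
tired_human = [True] * 8 + [False] * 2
# A subject who never slips.
steady = [True] * 10
```

Under these assumed thresholds, `machine_like(steady)` holds and `machine_like(tired_human)` does not. As the paragraph above suggests, that verdict on its own says little of metaphysical or moral significance.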

The second criterion is random number generation. Here, the test would be to see how good machines and humans are at producing random sequences of numbers. From what we know, neither is particularly good at producing truly random sequences of numbers, but they tend to fail in different directions. Humans have a tendency to space out numbers rather than clump together sequences of the same number. Thus, using the set-up of the traditional Turing Test once again, we might expect to see more clumping in the machine-produced sequence. But, again, if a human turned out to be indistinguishable from the machine in this test it probably wouldn’t tell us anything of deeper metaphysical or moral significance. It might just tell us that they know a lot about randomness.
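One rough way to measure ‘clumping’ is to count how often a number is immediately followed by the same number. The sketch below is my own illustration rather than anything from Frischmann’s paper. It assumes the sequence is made of the digits 0 to 9, so that a uniformly random sequence would repeat its neighbour about one time in ten, and the function names and the `tolerance` cut-off are hypothetical.

```python
import random


def adjacent_repeat_rate(seq):
    """Fraction of neighbouring pairs in seq that hold the same value."""
    if len(seq) < 2:
        return 0.0
    repeats = sum(1 for a, b in zip(seq, seq[1:]) if a == b)
    return repeats / (len(seq) - 1)


def looks_human(seq, alphabet_size=10, tolerance=0.5):
    """Guess 'human' when repeats are much rarer than chance would predict.

    With alphabet_size equally likely symbols, chance predicts a repeat rate
    of 1 / alphabet_size. The tolerance factor is an arbitrary choice.
    """
    expected = 1 / alphabet_size
    return adjacent_repeat_rate(seq) < expected * tolerance


# A pseudo-random 'machine' sequence (seeded so it is reproducible).
rng = random.Random(0)
machine_seq = [rng.randrange(10) for _ in range(1000)]

# A caricature of human output: carefully spaced, never repeating a neighbour.
spaced_out_seq = [i % 10 for i in range(1000)]
```

Comparing `adjacent_repeat_rate(machine_seq)` with `adjacent_repeat_rate(spaced_out_seq)` gives a feel for the difference. A serious test would use a proper statistical test of randomness rather than a single cut-off.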

These first two criteria are really just offered as a proof of concept. The third and fourth are more interesting.

The third criterion is common sense. This is a little bit obscure and Frischmann takes a very long time explaining it in the paper, but the basic gist of the idea will be familiar to AI researchers. It is well-known that in day-to-day problem solving humans rely on a shared set of beliefs and assumptions about how the world works in order to get by. These shared beliefs and assumptions form what we might call ‘folk’ wisdom. The beliefs and assumptions are often not explicitly stated. We are often only made aware of them when they are absent. But it is a real struggle for machines to gain access to this ‘folk’ wisdom. Designers of machines have to make explicit the tacit world of folk wisdom and they often fail to do so. The result is that machines frequently lack what we call common sense.

Nevertheless, and at the same time, there are many humans that supposedly lack common sense so it’s quite possible to use this as a criterion in a Reverse Turing Test. The details of the test set-up would be complicated. But one interesting suggestion from Frischmann’s paper is that the body of folk wisdom that we refer to as common sense may itself be subject to technologically induced change. Indeed, a loss of common sense may result from overreliance on technological aids.

Frischmann uses the example of Google Maps to illustrate this idea in the paper. Instead of relying on traditional methods for getting one’s bearings and finding out where one has to go, more and more humans are outsourcing this task to apps like Google Maps. The result is that they often sacrifice their own common sense. They trust the app too much and ignore obvious external cues that suggest they are going the wrong way. This may be one of the ways in which our techno-social environment is contributing to a form of dehumanisation.

The fourth criterion is rationality. Rationality is here understood in its typical economic/decision-theoretical sense. A decision-maker is rational if they have transitive preferences and choose so as to best satisfy them. It is relatively easy to program a machine to follow the rules of rationality. Indeed, the ability to follow such rules in a rigid, algorithmic fashion is often taken to be a distinctive property of machine-likeness. Humans are much less rational in their behaviour. A rich and diverse experimental literature has revealed various biases and heuristics that humans rely upon when making decisions. These biases and heuristics cause us to deviate from the expectations of rational choice theory. Much of this experimental literature relies on humans being presented with simple choice problems or vignettes. These choice problems could provide the basis for a Reverse Turing Test. The test subjects could be presented with a series of choice problems. We would expect the machine to output more ‘rational’ choices when compared to the human. If the human is indistinguishable from the machine in their choices, we might be inclined to call them ‘machine-like’.
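Transitivity is the easiest of these rules to check mechanically: if a subject picks A over B and B over C, they should not then pick C over A. Here is a minimal sketch of such a check. It is an illustration under my own assumptions, not a method from Frischmann’s paper: the subject is assumed to have made a forced choice between pairs of options, and the names `prefers` and `is_transitive` are hypothetical.

```python
from itertools import permutations


def prefers(choices, a, b):
    """True if the subject picked a when offered the pair {a, b}.

    choices maps frozenset({x, y}) to whichever of x or y was chosen.
    Pairs that were never offered count as 'no recorded preference'.
    """
    return choices.get(frozenset((a, b))) == a


def is_transitive(choices, options):
    """True if no three options form a cycle a > b > c > a."""
    return not any(
        prefers(choices, a, b) and prefers(choices, b, c) and prefers(choices, c, a)
        for a, b, c in permutations(options, 3)
    )


options = ["apple", "banana", "cherry"]

# Consistent subject: apple > banana > cherry, and apple > cherry.
consistent = {
    frozenset(("apple", "banana")): "apple",
    frozenset(("banana", "cherry")): "banana",
    frozenset(("apple", "cherry")): "apple",
}

# Cyclic subject: apple > banana > cherry, but cherry > apple.
cyclic = dict(consistent)
cyclic[frozenset(("apple", "cherry"))] = "cherry"
```

Here `is_transitive(consistent, options)` holds while `is_transitive(cyclic, options)` does not. A fuller Reverse Turing Test would look at many more choice problems, including the framing and anchoring vignettes used in the experimental literature.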

This Reverse Turing Test has some ethical and political significance. The biases and heuristics that define human reasoning are often essential to what we deem morally and socially acceptable conduct. Resolute utility maximisers have few friends in the world of ethical theory. Thus, to say that a human is too machine-like in their rationality might be to pass ethical judgment on their character and behaviour. Furthermore, this is another respect in which the techno-social environment might be encouraging us to become more machine-like. Frischmann spends a lot of time talking about the ‘nudge’ ethos in current public policy. The goal of the nudge philosophy is to get humans to better approximate the rules of rationality by constructing ‘choice architectures’ that take advantage of their natural biases. So, for example, many employer retirement programs in the US are constructed so that you automatically pay a certain percentage of your income into your retirement fund and this percentage escalates over time. You have to opt out of this program rather than opt in. This gets you to do the rational thing (plan for your retirement) by taking advantage of the human tendency towards laziness. In a techno-social environment dominated by the nudge philosophy, humans may appear very machine-like indeed.

4. Conclusion

That’s it for this post. To recap, the idea behind the Reverse Turing Test is that instead of thinking only about the ways in which machines can be human-like, we should also think about the ways in which humans can be machine-like. This requires us to reverse our perspective on the Turing Line. We need to start thinking about the properties and attributes we associate with machines and see how human beings might express those properties and attributes in particular environments. When we do all this, we acquire a new perspective on our current techno-social reality. We begin to see the ways in which technologies and social philosophies might encourage humans to be more machine-like. This might be a good thing in some respects, but it might also contribute to a culture of dehumanisation.

If you find this idea interesting, I recommend reading Brett’s original paper.
